Astrophysical Fluid Dynamics

2008 – 2010

Organisers

Oscar Agertz, Peter Engelmeier, Elena D’Onghia, George Lake, Lucio Mayer, Francesco Miniati, Ben Moore, Wes Peterson, Justin Read, Uros Seljak, Joachim Stadel, Romain Teyssier

Summary

The formation of cosmic structures, from planets to stars to galaxies, is a complex non-linear process that can be tackled using computational techniques. Central to these research topics is our ability to follow the dynamics of the gaseous component as it cools, radiates and condenses during structure formation. We are leading an international effort to critically test and compare existing numerical techniques and to discuss new algorithms in preparation for the next generation of petaflop supercomputers. This program will bring world-renowned experts to Zurich to collaborate, culminating in a workshop and a conference at which experts from across the world will discuss the future of this field.

Context & Scientific Background

The past decade has proved to be an exciting time in cosmology. A combination of satellite and ground-based observational missions has measured the fundamental parameters that govern the evolution of the Universe. The matter and energy densities, the expansion rate and the primordial power spectrum are now well constrained, so the initial conditions from which structure develops are known. However, it is not understood how the matter in the Universe arranges itself into galaxies, stars and planetary systems. These are among the main problems facing cosmologists today, and they are the focus of our research: we seek to understand how cosmic structures arise from these initial conditions. During this process the density of matter increases by a factor of more than 10^9. The length scales involved, from megaparsecs down to individual stars and planets, must be modelled simultaneously, as must dynamical timescales ranging from the Hubble time down to years.

The current paradigm is that galaxies (and subsequently stars and planets) form from fluctuations in the matter distribution that grow through gravitational instability and the subsequent hierarchical merging and accretion of dark matter structures. It is on non-linear scales that the nature of dark matter manifests itself, leading to distinct observational signatures. The Universe was re-ionised at a redshift of z ~ 10, possibly by the first stars. After being shock heated during multiple mergers, the gas begins to cool rapidly within the most massive dark matter halos, attaining high densities and possibly forming rotationally supported disks. Star formation and merging between these “proto-galaxies” produce the spheroidal components of galaxies, whereas continued accretion of gas forms the disk systems that we are most familiar with today. Turbulent processes within the interstellar medium lead to the formation of transient molecular clouds that fragment further into numerous cold cores, forming stars with their associated protoplanetary disks.

Our theoretical understanding lags some way behind the stream of high-quality observational data from ground- and space-based facilities around the world. One reason theoreticians are playing “catch up” is that the investment in observational facilities is about two orders of magnitude larger than that in theoretical research. Whilst theorists are still trying to understand how galaxies assemble from the dark matter and baryons in the Universe, observational astronomers have exquisite multi-wavelength data for a million galaxies, including high-resolution spectra, element abundances, colour maps and kinematic data for individual galaxies. Theorists have not yet succeeded in producing a single realistic galaxy via direct simulation. We do not know how the baryons collect at the centres of galaxies, and we still do not have a good idea of how individual stars form.

Similar problems exist on other astrophysical scales. Astronomers are cataloguing the properties of numerous extra-solar planetary systems, but theorists have not yet formed a single realistic solar system using computational techniques; we are still debating the basic mechanism of giant planet formation. To redress this imbalance, many research groups are carrying out extensive numerical simulation programs that attempt to follow the evolution of the Universe from the Big Bang to the present day, on different scales and within different astronomical contexts. This requires high-performance parallel codes that can simultaneously follow the collisionless evolution of the dark matter particles and the collisional evolution of the baryons, including shock heating, radiative cooling, radiative transfer and, ultimately, magnetohydrodynamics (MHD).

In the past few years, numerical simulations have helped shape our understanding of fundamental topics ranging from the origin of planetary systems and the formation and evolution of galaxies to the theory of how the large-scale structure of the Universe develops. Simulations are the ideal means of relating theoretical models to observational data, and advances in algorithms and supercomputer technology have provided the platform for increasingly realistic astrophysical modelling. The dynamic range in time, mass and space that must be followed often exceeds that found in other computational science problems. Indeed, the adaptive multi-grid and Lagrangian SPH codes developed for astrophysics research are among the most sophisticated in the world. The numerical techniques developed for astrophysics also find their way into other disciplines: algorithms such as tree codes and SPH have been applied in biological and industrial sectors, in applications as diverse as protein folding calculations, automobile crash modelling and visual effects for films such as The Lord of the Rings.

Computational Astrophysics in Zurich

Members of the Institute for Theoretical Physics have played the main role in developing GASOLINE, the smoothed particle hydrodynamics (SPH) extension to PKDGRAV (author Joachim Stadel), which allows us to simulate the baryonic component, or gas, as well as the collisionless dark matter. GASOLINE also includes the physics of radiative cooling, star formation and the feedback of energy from supernova explosions. Development of this code is an ongoing process, and several major enhancements to GASOLINE are planned, including faster domain decomposition, accurate and fast cooling functions, a scheme for radiative transfer, local tree repair, and new integration and multi-stepping schemes. For certain problems we require a new hybrid code that can follow the collisional evolution of stars and black holes within collisionless dark matter structures; we are exploring GRAPE hardware and regularisation techniques to embed within our existing parallel code. With these extensions to GASOLINE we would be able to follow the growth of super-massive black holes in the centres of galaxies and the collisional evolution of the early solar system. Code development, testing and the design of parallel visualisation and analysis tools are important aspects of our research.
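Multi-stepping is only named in the plan above, so the following is a generic illustration and not a description of GASOLINE's actual scheme. It sketches the power-of-two block-timestep ("rung") approach commonly used in N-body tree codes. Each particle is placed on the coarsest rung r whose step dt_base/2^r satisfies its own timestep criterion, and a particle on rung r is updated every 2^(max_rung - r) of the finest substeps. The acceleration-based criterion dt = η√(ε/|a|), the default η = 0.2, the max_rung cap and all function names are assumptions made for this example.

```python
import math


def timestep_criterion(accel, softening, eta=0.2):
    """Illustrative acceleration-based step: dt = eta * sqrt(softening / |a|)."""
    a = abs(accel)
    if a == 0.0:
        return math.inf  # no acceleration, so this criterion imposes no limit
    return eta * math.sqrt(softening / a)


def assign_rung(dt_wanted, dt_base, max_rung=30):
    """Smallest rung r with dt_base / 2**r <= dt_wanted, capped at max_rung."""
    rung = 0
    while rung < max_rung and dt_base / 2**rung > dt_wanted:
        rung += 1
    return rung


def active_rungs(substep, max_rung):
    """Rungs due for an update at this substep.

    Substeps are counted in units of the finest step, dt_base / 2**max_rung,
    so rung r is active every 2**(max_rung - r) substeps.
    """
    return [r for r in range(max_rung + 1) if substep % 2 ** (max_rung - r) == 0]
```

For example, with ε = 1 and dt_base = 1, hypothetical accelerations of 0.5, 4 and 40 land on rungs 2, 4 and 5. Over one base step, only the particle on rung 5 is updated at every one of the 32 finest substeps, while the particle on rung 2 is updated just four times. Restricting step sizes to powers of two keeps all rungs synchronised at the coarser step boundaries.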

We design and maintain our own supercomputing facilities. In 2002 we installed one of the largest supercomputers in Switzerland, the zbox1, which has led to well over a hundred publications. This novel high-density machine was recently upgraded to 144 quad-core CPUs with over a terabyte of RAM and 100 terabytes of disk storage. In collaboration with other computational research groups at the MNF, we installed the zbox2 in 2006, a 500-processor supercomputer co-funded by the SNF and the University of Zurich.

AstroSim (PI: Moore; www.astrosim.net), a large five-year European program in computational astrophysics, was successfully established with funding from 12 European countries. Over sixty institutes in 16 countries, with over 200 active computational researchers, co-signed the application, demonstrating the size and significance of this field within Europe. The program aims to bring together the diverse community of computational researchers working across the various subfields of astrophysics.

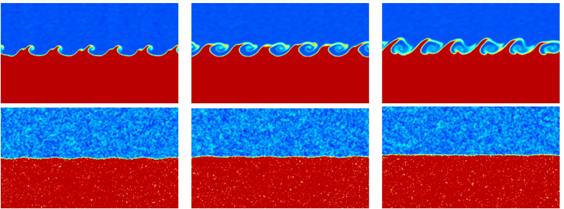

As an illustration of our code and algorithm testing program, we have recently questioned the ability of SPH methods to capture basic gaseous hydrodynamics. Agertz et al. (2007) showed that the two commonly used techniques, Eulerian grid-based methods and SPH, differ strikingly in their ability to model processes that are fundamentally important across many areas of astrophysics. Whilst Eulerian grid-based methods are able to resolve and treat important dynamical instabilities, such as the Kelvin-Helmholtz and Rayleigh-Taylor instabilities, these processes are poorly resolved or not resolved at all by existing SPH techniques (see the figure above). We found that this is because SPH, at least in its standard implementation, introduces spurious pressure forces on particles in regions with steep density gradients. These forces open a gap at the boundary, roughly the size of the SPH smoothing kernel, across which information is not transferred.
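The origin of these spurious forces can be seen in a one-dimensional toy problem. The sketch below is an illustrative assumption, not the Agertz et al. (2007) test setup. It places equal-mass particles on either side of a contact discontinuity with a 4:1 density contrast in pressure equilibrium and gives each particle the specific internal energy u of its own side. It then estimates density with the standard SPH kernel sum ρ_i = Σ_j m W(x_i - x_j, h), using a cubic spline kernel and, for simplicity, a fixed smoothing length h (production codes adapt h per particle). The density estimate is smoothed over the kernel width while u jumps sharply, so the pressure P = (γ - 1)ρu is wrong for particles within about 2h of the interface. All constants and function names are hypothetical.

```python
# Toy 1D demonstration of the SPH density/pressure error at a contact
# discontinuity. All parameters are illustrative assumptions.

GAMMA = 5.0 / 3.0            # adiabatic index of an ideal monatomic gas
P0 = 1.0                     # true pressure, uniform across the contact
RHO_LEFT, RHO_RIGHT = 4.0, 1.0
MASS = 0.1                   # equal particle masses
H = 0.2                      # fixed smoothing length (2x the coarse spacing)


def cubic_spline_w(r, h):
    """1D cubic spline (M4) kernel, normalised to unit integral, support 2h."""
    q = abs(r) / h
    sigma = 2.0 / (3.0 * h)
    if q < 1.0:
        return sigma * (1.0 - 1.5 * q**2 + 0.75 * q**3)
    if q < 2.0:
        return sigma * 0.25 * (2.0 - q) ** 3
    return 0.0


def make_particles():
    """Particles on [-1, 1] with spacing MASS / rho on each side of x = 0.

    Returns positions and specific internal energies u = P0 / ((gamma - 1) rho),
    so the true pressure is P0 everywhere while u jumps sharply at x = 0.
    """
    xs, us = [], []
    for side, rho in ((-1.0, RHO_LEFT), (1.0, RHO_RIGHT)):
        dx = MASS / rho
        n = round(1.0 / dx)
        for k in range(n):
            xs.append(side * dx * (k + 0.5))
            us.append(P0 / ((GAMMA - 1.0) * rho))
    return xs, us


def sph_density(xs, h):
    """Standard SPH summation: rho_i = sum_j m W(x_i - x_j, h)."""
    return [sum(MASS * cubic_spline_w(xi - xj, h) for xj in xs) for xi in xs]


def pressure_profile(h=H):
    """Return (x, rho_sph, P_sph) per particle, with P = (gamma - 1) rho u."""
    xs, us = make_particles()
    rhos = sph_density(xs, h)
    return [(x, rho, (GAMMA - 1.0) * rho * u) for x, rho, u in zip(xs, rhos, us)]
```

Calling pressure_profile() and inspecting the particles on either side of x = 0 shows the pressure falling below P0 on the dense side and rising above it on the tenuous side. Particles more than 2h from both the interface and the domain edges recover P0. In a dynamical run, this artificial pressure jump is what pushes particles apart at the boundary.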

To carry out realistic simulations of cosmic structure formation, we wish to thoroughly test and compare existing algorithms and techniques for following astrophysical fluids and plasmas. We also wish to prepare for, and discuss, the programming challenges that await us when we have access to supercomputers with over 10,000 CPUs. To follow the large range of dynamical scales, we need to take full advantage of the memory and processing speed of the forthcoming hardware that will be available for our research in the next couple of years. This program in computational fluid dynamics aims to bring together the necessary expertise and to create the environment needed to meet these challenges.